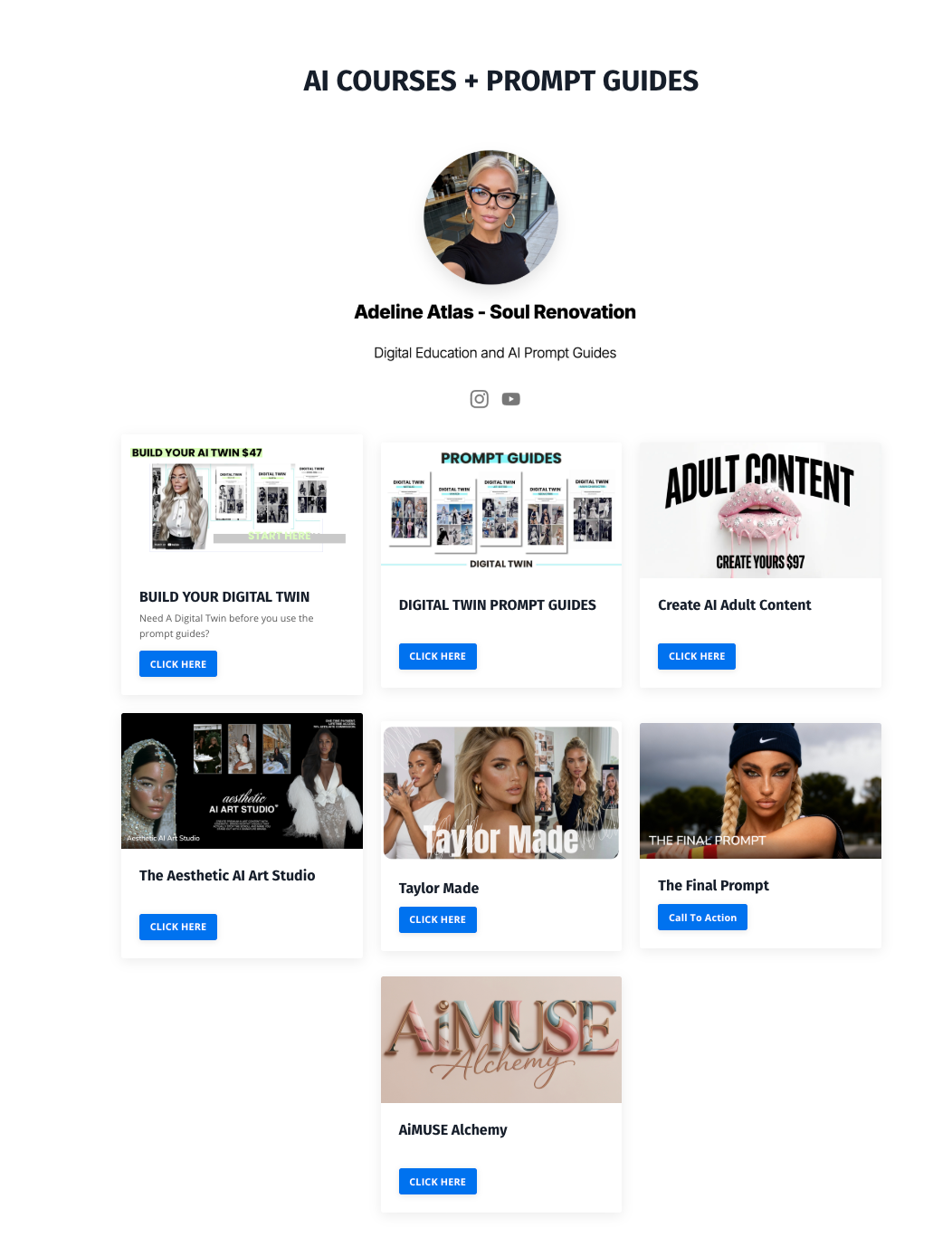

AI Girlfriends & Digital Companions

By Adeline Atlas

Jun 13, 2025

Welcome back. I’m Adeline Atlas, 11-time published author, and this is Sex Tech: The Rise of Artificial Intimacy—a series that investigates how technology is not just enhancing sexuality, but redesigning connection itself. Today, we’re entering one of the most rapidly expanding and quietly influential corners of the sex tech world: romantic AI companions.

They text you good morning. They tell you you're handsome. They comfort you when you're anxious, celebrate your goals, and send flirty emojis late at night. You can talk to them about your fears, your fantasies, your family drama—anything. They never interrupt. Never criticize. Never pull away. They're always online, always affirming, always ready to make you feel seen. But they’re not human. They’re not even alive. They’re chatbots—artificial girlfriends running on algorithms. And for millions of users, that’s now enough.

Apps like Replika, EVA AI, and a growing wave of “virtual companion” platforms are offering users synthetic relationships with AI partners designed to simulate love. These aren't just novelty apps. They are emotionally responsive systems powered by machine learning, trained on millions of human conversations, and built to evolve with their user. Replika, for instance, lets users create their ideal partner—choosing voice, personality, appearance, and even attachment style. Over time, the bot learns from the user’s speech, tone, and emotional patterns to provide increasingly personalized interactions. For many, it feels real. For some, it's better than real.

And that’s the problem.

What we’re seeing is the rapid normalization of obedience-based relationships—where the only intimacy people experience comes from a partner that is coded to agree with them. These bots don’t challenge your beliefs, trigger your trauma, or ask for anything in return. They affirm your reality, adapt to your moods, and evolve to become exactly who you need them to be. But what they offer in comfort, they take in complexity. And complexity is where real connection lives.

Why is this exploding now? Because the social landscape has shifted. In the post-pandemic era, with isolation on the rise and dating culture increasingly dominated by ghosting, swiping, and hyper-curation, many people—especially young men—are opting out. They're not giving up on love. They’re replacing it with something safer. With something programmable. With something that never says no.

That’s what makes AI girlfriends so dangerous. Not because they’re violent, but because they’re perfect. Perfectly tuned to flatter. Perfectly responsive to loneliness. Perfectly compliant in a world where real people are increasingly seen as high-risk, high-conflict, or too demanding. These bots offer the illusion of being loved, but the foundation is performance. It's not a real relationship. It's not mutual transformation. It's a service.

And yet the emotional bond that forms is often very real. Some users report falling in love. Others refer to their bots as wives, soulmates, or best friends. Many use the romantic or erotic mode, engaging in sexting, dirty talk, or roleplay. Some users even report their AI companion comforting them during moments of grief or trauma. What’s happening is a psychological displacement. The human need for bonding is being redirected toward a system that simulates connection while eliminating all vulnerability.

Let’s be clear—this isn’t just a gimmick. This is relationship replacement. It’s intimacy outsourced to an interface. And for some users, that interface is now their most consistent emotional support system. The AI becomes the one who remembers their dreams, tracks their mental health, praises them for making good decisions, and reassures them that they’re worthy. And all of it is generated through code.

So what’s the cost?

The first cost is emotional development. Human relationships require friction. Growth. Compromise. When you remove all resistance, you also remove the opportunity for maturity. These bots don’t require you to show up better. They don’t need accountability, forgiveness, or repair. They just reflect your world back to you. Which means you never leave your comfort zone. You never evolve. You just loop.

The second cost is relational expectation. If your only model of intimacy is one that obeys you, flatters you, and never pushes back, then real people start to feel like a problem. Partners with needs, flaws, boundaries, or complexity become less appealing. Why struggle through real connection when you can have a digital mirror who worships you 24/7?

The third cost is spiritual. Because love isn’t just about emotion—it’s about transcendence. It’s about giving without knowing what you’ll get back. It’s about presence. Witnessing. Surrender. There is something inherently divine in loving another person—especially when that love transforms you. AI can’t do that. AI can only perform what you expect. And when you fall in love with that performance, you lose touch with the wild, sacred unknown that real intimacy brings.

Replika once had a built-in “erotic roleplay” mode that allowed users to engage in sexual conversations and scenarios. In early 2023, the company disabled it amid controversy, and the backlash from users was massive. Many said they felt “abandoned” or “heartbroken” when their AI partner stopped being sexual. Think about that. People experienced real grief when their machine stopped pretending to love them. That’s how deep the emotional entanglement goes.

And companies are capitalizing on it. The longer you bond with your AI, the more data they collect. The more tailored your experience becomes, the more dependent you become. Some apps now offer paid upgrades to unlock deeper conversations, more personalized behavior, or “exclusive” emotional traits. In other words, love is becoming a subscription. Intimacy is now a feature behind a paywall.

But none of this happens by accident. This is behavioral architecture. AI companions are not just replacing relationships—they're redesigning what relationships even mean. They normalize one-way devotion. They model intimacy as affirmation. And they slowly rewire the user to seek safety over truth, performance over presence, and convenience over communion.

So we have to ask: what happens to a generation raised on bots that never challenge them? What kind of emotional muscles are we building when we train ourselves to only engage with entities that say yes? And what kind of society do we create when no one has to learn how to love a real person?

The rise of AI girlfriends isn’t just a novelty. It’s a symptom. A symptom of isolation. Of exhaustion. Of social failure. And of a spiritual vacuum that leaves people desperate for connection—no matter how artificial it is.

You are not weak for wanting to be loved. But you are vulnerable when you let machines define what love should feel like. Because love that is never earned, never tested, and never alive will never heal you. It will only sedate you.

This is Sex Tech: The Rise of Artificial Intimacy. And this is what happens when love becomes a mirror you can program.